The sitemap.xml file and the correct robots.txt for the site are two mandatory documents that contribute to the fast and complete indexing of all necessary pages of a web resource by search robots. Correct site indexing in Yandex and Google is the key to successful blog promotion in search engines.

I already wrote how to make a sitemap in XML format and why it is needed. Now let's talk about how to create the correct robots.txt for a WordPress site and why it is needed in general. Detailed information about this file can be obtained from Yandex and Google themselves, respectively. I’ll get to the core and touch on the basic robots.txt settings for WordPress using my file as an example.

Why do you need a robots.txt file for a website?

The robots.txt standard appeared back in January 1994. When scanning a web resource, search robots first look for the text file robots.txt, located in the root folder of the site or blog. With its help, we can specify certain rules for robots of different search engines by which they will index the site.

Correctly setting up robots.txt will allow you to:

- exclude duplicates and various junk pages from the index;

- ban the indexing of pages, files and folders that we want to hide;

- completely deny indexing to certain search robots (for example, Yahoo, in order to hide information about incoming links from competitors);

- indicate the main mirror of the site (with www or without www);

- specify the path to the sitemap sitemap.xml.

How to create the correct robots.txt for a site

There are special generators and plugins for this purpose, but it is better to do this manually.

You just need to create a regular text file called robots.txt, using any text editor (for example, Notepad or Notepad++) and upload it to your hosting in the root folder of your blog. Certain directives must be written in this file, i.e. indexing rules for robots of Yandex, Google, etc.

If you are too lazy to bother with this, then below I will give an example, from my point of view, of the correct robots.txt for WordPress from my blog. You can use it by replacing the domain name in three places.

Robots.txt creation rules and directives

For successful search engine optimization of a blog, you need to know some rules for creating robots.txt:

- A missing or empty robots.txt file means that search engines are allowed to index all content of the web resource.

- robots.txt should open at site.ru/robots.txt, return a 200 OK response code to the robot, and be no more than 32 KB in size. A file that fails to open (for example, due to a 404 error) or is larger will be treated as fully permissive.

- The number of directives in the file should not exceed 1024. The length of one line should not exceed 1024 characters.

- A valid robots.txt file can have multiple instruction blocks, each of which must begin with a User-agent directive and contain at least one Disallow directive. Usually instructions are written in robots.txt for Google and all other robots, and separately for Yandex.

Basic robots.txt directives:

User-agent – indicates which search robot the instruction is addressed to.

The symbol “*” means that this applies to all robots, for example:

User-agent: *

If we need to create a rule in robots.txt for Yandex, then we write:

User-agent: Yandex

If a directive is specified for a specific robot, the User-agent: * directive is not taken into account by it.
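This group selection is easy to try out with Python's standard urllib.robotparser module. The sketch below is only an illustration: the rules, the bot name OtherBot and the site.ru addresses are made up for the example, and Python's parser is not the one that Yandex or Google actually run.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: everything is closed for "all robots",
# but Yandex gets its own, more permissive group.
RULES = """\
User-agent: *
Disallow: /

User-agent: Yandex
Disallow: /wp-admin
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# Yandex reads only its own group, so the blanket ban does not apply to it.
yandex_blog = parser.can_fetch("Yandex", "http://site.ru/blog/")
yandex_admin = parser.can_fetch("Yandex", "http://site.ru/wp-admin/")
# A robot without its own group falls back to "User-agent: *".
other_blog = parser.can_fetch("OtherBot", "http://site.ru/blog/")

print(yandex_blog, yandex_admin, other_blog)
```

With these rules, Yandex may fetch the blog page but not /wp-admin/, while the robot without its own group is shut out completely.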

Disallow and Allow – respectively, prohibit and allow robots to index the specified pages. All addresses must be specified from the root of the site, i.e. starting from the third slash. For example:

- Prohibiting all robots from indexing the entire site:

User-agent: *

Disallow: /

- Yandex is prohibited from indexing all pages starting with /wp-admin:

User-agent: Yandex

Disallow: /wp-admin

- The empty Disallow directive allows everything to be indexed and is similar to Allow. For example, I allow Yandex to index the entire site:

User-agent: Yandex

Disallow:

- And vice versa, an empty Allow directive prohibits all search robots from indexing any page:

User-agent: *

Allow:

- Allow and Disallow directives from the same User-agent block are sorted by URL prefix length (shortest first) and applied sequentially. If several directives match a page of the site, the last one in the sorted list is applied. The order in which the directives are written in the file therefore no longer matters. If directives have prefixes of the same length, Allow takes precedence. These rules came into force on March 8, 2012. For example, the following allows Yandex to index only pages starting with /wp-includes:

User-agent: Yandex

Disallow: /

Allow: /wp-includes
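The sorting rule can be sketched in a few lines of Python. This is a simplified model and not Yandex's actual code: the function name is_allowed is my own, it handles plain prefixes only (no * or $), and it skips empty values.

```python
def is_allowed(rules, path):
    """rules: (directive, prefix) pairs taken from one User-agent block."""
    # Sort by prefix length; on equal length Disallow sorts first,
    # so Allow ends up later in the list and wins the tie.
    ordered = sorted(rules, key=lambda rule: (len(rule[1]), rule[0] == "Allow"))
    verdict = True  # nothing matched: crawling is allowed by default
    for directive, prefix in ordered:
        # Empty values are skipped in this sketch.
        if prefix and path.startswith(prefix):
            verdict = directive == "Allow"  # the last match wins
    return verdict


rules = [("Disallow", "/"), ("Allow", "/wp-includes")]
print(is_allowed(rules, "/wp-includes/js/jquery.js"))
print(is_allowed(rules, "/wp-admin/"))
```

Because the rules are sorted before they are applied, writing Allow above or below Disallow in the file gives the same result.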

Sitemap – Specifies the XML sitemap address. One site can have several Sitemap directives, which can be nested. All Sitemap file addresses must be specified in robots.txt to speed up site indexing:

Sitemap: http://site/sitemap.xml.gz

Sitemap: http://site/sitemap.xml
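If you want to check which sitemap addresses a parser actually picks up from the file, Python's standard urllib.robotparser can list them (the site_maps() method needs Python 3.8 or newer). A small sketch; the Disallow line is added only to make the sample file look realistic.

```python
from urllib.robotparser import RobotFileParser

RULES = """\
User-agent: *
Disallow: /wp-admin

Sitemap: http://site/sitemap.xml.gz
Sitemap: http://site/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# site_maps() returns the Sitemap addresses in file order,
# or None when the file has no Sitemap lines.
sitemaps = parser.site_maps()
print(sitemaps)
```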

Host – tells the mirror robot which website mirror to consider the main one.

If the site is accessible at several addresses (for example, with www and without www), this creates complete duplicates of pages, which can trigger a search engine filter. Also, in this case, a mirror other than the main one may be indexed, while the main mirror, on the contrary, is excluded from the search engine index. To prevent this, use the Host directive, which is intended only for Yandex and may appear in robots.txt only once. It is written after Disallow and Allow and looks like this:

Host: site.ru

Crawl-delay – sets the delay between downloading pages in seconds. Used if there is a heavy load and the server does not have time to process requests. On young sites it is better not to use the Crawl-delay directive. It is written like this:

User-agent: Yandex

Crawl-delay: 4
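From the robot's side, obeying this directive simply means pausing between requests. Here is a rough sketch of a polite crawler loop; polite_fetch_all and its parameters are hypothetical names, and the delay is read with the standard urllib.robotparser module.

```python
import time
from urllib.robotparser import RobotFileParser

RULES = """\
User-agent: Yandex
Crawl-delay: 4
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# Delay in seconds for the given robot, or None if the directive is absent.
delay = parser.crawl_delay("Yandex")


def polite_fetch_all(urls, fetch, pause=time.sleep):
    """Call fetch(url) for every URL, waiting `delay` seconds between calls."""
    for index, url in enumerate(urls):
        if index and delay:
            pause(delay)  # no pause before the very first request
        fetch(url)


print(delay)
```

The pause function is passed in as a parameter so that the loop can be tried out without actually waiting.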

Clean-param – supported only by Yandex and used to eliminate duplicate pages that differ only in URL parameters, merging them into one. This way the Yandex robot will not download similar pages many times, for example those produced by referral links. I haven't used this directive yet, but you can read about it in detail in the Yandex help on robots.txt mentioned at the beginning of the article.

The special characters * and $ are used in robots.txt to indicate the paths of the Disallow and Allow directives:

- The special character “*” means any sequence of characters. For example, Disallow: /*?* means a ban on any pages where “?” appears in the address, regardless of what characters come before and after this character. By default, the special character “*” is added to the end of each rule, even if it is not specified specifically.

- The “$” symbol cancels the “*” at the end of the rule and means strict matching. For example, the Disallow: /*?$ directive will prohibit indexing of pages ending with the “?” character.
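To get a feel for how these two characters behave, a rule can be translated into an ordinary regular expression. This is a sketch under my own assumptions: rule_to_regex and matches are hypothetical helper names, and real search engines use their own matching code.

```python
import re


def rule_to_regex(rule):
    """Translate a Disallow/Allow path with * and $ into a compiled regex."""
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape every literal piece, then let each "*" match any characters.
    body = ".*".join(re.escape(part) for part in rule.split("*"))
    # Without "$" the rule behaves as if it ended with "*".
    return re.compile("^" + body + ("$" if anchored else ".*"))


def matches(rule, path):
    """True if the path falls under the rule."""
    return rule_to_regex(rule).match(path) is not None


print(matches("/*?*", "/page?x=1"))
print(matches("/*?$", "/page?x=1"))
```

The first rule catches any address containing "?", while the second catches only addresses that end with it.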

Example robots.txt for WordPress

Here is an example of my robots.txt file for a blog on the WordPress engine:

User-agent: *
Disallow: /cgi-bin
Disallow: /wp-admin
Disallow: /wp-includes
Disallow: /wp-content/plugins
Disallow: /wp-content/cache
Disallow: /wp-content/themes
Disallow: /trackback
Disallow: */trackback
Disallow: */*/trackback
Disallow: /feed/
Disallow: */*/feed/*/
Disallow: */feed
Disallow: /*?*
Disallow: /?s=

User-agent: Yandex
Disallow: /cgi-bin
Disallow: /wp-admin
Disallow: /wp-includes
Disallow: /wp-content/plugins
Disallow: /wp-content/cache
Disallow: /wp-content/themes
Disallow: /trackback
Disallow: */trackback
Disallow: */*/trackback
Disallow: /feed/
Disallow: */*/feed/*/
Disallow: */feed
Disallow: /*?*
Disallow: /?.ru/sitemap.xml..xml

To save yourself the trouble of creating the correct robots.txt for WordPress, you can use this file. It causes no problems with indexing. I have a copy protection script, so it will be more convenient to download the ready-made robots.txt and upload it to your hosting. Just don’t forget to replace the name of my site with yours in the Host and Sitemap directives.

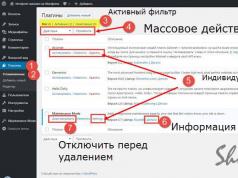

Useful additions for properly setting up the robots.txt file for WordPress

If threaded comments are enabled on your WordPress blog, they create duplicate pages of the form ?replytocom= . In robots.txt, such pages are closed with the Disallow: /*?* directive. But this is not a real solution, and it is better to remove the bans and fight replytocom in another way.

Thus, the current robots.txt as of July 2014 looks like this:

User-agent: *
Disallow: /wp-includes
Disallow: /wp-feed
Disallow: /wp-content/plugins
Disallow: /wp-content/cache
Disallow: /wp-content/themes

User-agent: Yandex
Disallow: /wp-includes
Disallow: /wp-feed
Disallow: /wp-content/plugins
Disallow: /wp-content/cache
Disallow: /wp-content/themes
Host: site.ru

User-agent: Googlebot-Image
Allow: /wp-content/uploads/

User-agent: YandexImages
Allow: /wp-content/uploads/

Sitemap: http://site.ru/sitemap.xml

It additionally sets out the rules for image indexing robots.

User-agent: Mediapartners-Google

Disallow:

If you plan to promote category or tag pages, you should open them to robots. For example, on my blog, categories are not closed from indexing, since they only show short excerpts of articles, which is insignificant in terms of duplicate content. And if the blog feed displays excerpts filled with unique text, there will be no duplication at all.

If you do not use the above plugin, you can prohibit the indexing of tags, categories, and archives in your robots.txt file, for example by adding the following lines:

Disallow: /author/

Disallow: /tag

Disallow: /category/*/*

Disallow: /20*

Don't forget to check the robots.txt file in the Yandex.Webmaster panel, and then re-upload it to your hosting.

If you have any additions to configure robots.txt, write about it in the comments.

Each blog has its own answer to this. Therefore, newcomers to search engine promotion often get confused, like this:

What kind of robots ti ex ti?

The robots.txt file, or index file, is a regular text document in UTF-8 encoding, valid for the HTTP, HTTPS, and FTP protocols. The file gives search robots recommendations on which pages and files should be crawled. If the file contains characters in an encoding other than UTF-8, search robots may process them incorrectly. The rules listed in the robots.txt file are only valid for the host, protocol, and port number where the file is located.

The file should be located in the root directory as a plain text document and be available at: https://site.com.ua/robots.txt.

Other files often begin with a BOM (Byte Order Mark). This is a Unicode character used to determine the byte order when reading data. Its code point is U+FEFF. At the beginning of the robots.txt file, the byte order mark is ignored.
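If you read robots.txt in your own scripts, the same behavior is easy to reproduce. A minimal sketch in Python (decode_robots is a hypothetical helper name): the "utf-8-sig" codec decodes UTF-8 and silently drops a leading BOM if there is one.

```python
def decode_robots(raw):
    """Decode robots.txt bytes as UTF-8, ignoring a leading BOM if present."""
    # "utf-8-sig" strips the bytes EF BB BF (the UTF-8 form of U+FEFF)
    # when they appear at the very start of the data.
    return raw.decode("utf-8-sig")


with_bom = b"\xef\xbb\xbfUser-agent: *\nDisallow:\n"
without_bom = b"User-agent: *\nDisallow:\n"

same_text = decode_robots(with_bom) == decode_robots(without_bom)
print(same_text)
```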

Google has set a size limit for the robots.txt file - it should not weigh more than 500 KB.

If you are interested in the purely technical details, the robots.txt format is described in Backus-Naur form (BNF), using the rules of RFC 822.

When processing rules in the robots.txt file, search robots receive one of three instructions:

- partial access: scanning of individual website elements is available;

- full access: you can scan everything;

- complete ban: the robot cannot scan anything.

When scanning the robots.txt file, robots receive the following responses:

- 2xx: the scan was successful;

- 3xx: the search robot follows the redirect until it receives a different response. Most often the robot makes five attempts to get a response other than 3xx, after which a 404 error is logged;

- 4xx: the search robot assumes that it may crawl the entire content of the site;

- 5xx: treated as temporary server errors; scanning is completely prohibited. The robot will keep requesting the file until it receives a different response. The Google search robot can determine whether the response for missing pages is configured correctly: if a missing page returns a 5xx response instead of a 404 error, the page will be processed as if it had returned response code 404.
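The list above boils down to a small decision table. Here it is as a Python sketch; the function name crawl_policy and the wording of the returned strings are my own, not taken from any search engine.

```python
def crawl_policy(status):
    """Map the HTTP status returned for /robots.txt to a crawl decision."""
    if 200 <= status < 300:
        return "use the rules in the file"
    if 300 <= status < 400:
        return "follow the redirect"
    if 400 <= status < 500:
        return "crawl everything"
    if 500 <= status < 600:
        return "crawl nothing, retry later"
    raise ValueError(f"unexpected status: {status}")


for code in (200, 301, 404, 503):
    print(code, crawl_policy(code))
```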

It is not yet known how a robots.txt file is processed when it is inaccessible because the server has problems with Internet access.

Why do you need a robots.txt file?

For example, sometimes robots should not visit:

- pages with personal information of users on the site;

- pages with various forms for sending information;

- mirror sites;

- search results pages.

Important: even if a page is closed in the robots.txt file, it may still appear in the search results if a link to it is found within the site or on an external resource.

This is how search engine robots see a site with and without a robots.txt file:

Without robots.txt, information that should be hidden from prying eyes may end up in the search results, and because of this, both you and the site will suffer.

This is how the search engine robot sees the robots.txt file:

Google detected the robots.txt file on the site and found the rules by which the site's pages should be crawled

How to create a robots.txt file

Use Notepad, Sublime, or any other text editor.

User-agent - business card for robots

User-agent is a rule stating which robots must follow the instructions described in the robots.txt file. There are currently 302 known search robots.

User-agent: *

This entry says that the rules in robots.txt apply to all search robots.

For Google, the main robot is Googlebot. If we want to address only this robot, the entry in the file will be:

User-agent: Googlebot

In this case, all other robots will crawl the content according to their rules for processing an empty robots.txt file.

For Yandex, the main robot is... Yandex:

User-agent: Yandex

Other special robots:

- Mediapartners-Google: for the AdSense service;

- AdsBot-Google: to check the quality of the landing page;

- YandexImages: Yandex.Images indexer;

- Googlebot-Image: for pictures;

- YandexMetrika: Yandex.Metrica robot;

- YandexMedia: a robot that indexes multimedia data;

- YaDirectFetcher: Yandex.Direct robot;

- Googlebot-Video: for video;

- Googlebot-Mobile: for the mobile version;

- YandexDirectDyn: dynamic banner generation robot;

- YandexBlogs: a blog search robot that indexes posts and comments;

- YandexMarket: Yandex.Market robot;

- YandexNews: Yandex.News robot;

- YandexDirect: downloads information about the content of partner sites of the Advertising Network in order to clarify their topics for the selection of relevant advertising;

- YandexPagechecker: micro markup validator;

- YandexCalendar: Yandex.Calendar robot.

Disallow - placing “bricks”

Disallow gives recommendations on what information should not be scanned. A rule that closes the entire site (Disallow: /) is worth using if the site is still being improved and you do not want it to appear in the search results in its current state.

It is important to remove this rule as soon as the site is ready for users to see it. Unfortunately, many webmasters forget about this.

Example. How to set up a Disallow rule to advise robots not to view the contents of the folder /papka/:

Disallow: /papka/

And this line prohibits indexing all files with the extension .gif:

Disallow: /*.gif$

Allow - we direct the robots

Allow permits scanning of any file, directory, or page. Let’s say you want robots to be able to view only pages that start with /catalog, and to close all other content. In this case, the following combination is used:

User-agent: *

Allow: /catalog

Disallow: /

Allow and Disallow rules are sorted by URL prefix length (smallest to largest) and applied sequentially. If more than one rule matches a page, the robot selects the last rule in the sorted list.

Host - select a mirror site

Host is one of the mandatory rules for robots.txt; it tells the Yandex robot which of the site’s mirrors should be considered for indexing.

A site mirror is an exact or almost exact copy of a site, available at different addresses.

The robot will not get confused when finding site mirrors and will understand that the main mirror is specified in the robots.txt file. The site address is indicated without the “http://” prefix, but if the site runs on HTTPS, the “https://” prefix must be specified.

How to write this rule:

An example of a robots.txt file if the site runs on the HTTPS protocol:

Sitemap - the site's medical record

Sitemap tells robots that all site URLs required for indexing are located at http://site.ua/sitemap.xml. With each crawl, the robot will look at what changes were made to this file and quickly update information about the site in the search engine databases.

Crawl-delay - stopwatch for weak servers

Crawl-delay is a parameter that sets the interval at which the robot will load the site's pages. This rule is relevant if you have a weak server, where search robots accessing the site pages may cause long delays. The parameter is measured in seconds.

Clean-param - duplicate content hunter

Clean-param helps deal with GET parameters to avoid duplication of content that may be available at different dynamic addresses (with question marks). Such addresses appear if the site has various sortings, session ids, and so on.

Let's say the page is available at the following addresses:

www.site.com/catalog/get_phone.ua?ref=page_1&phone_id=1

www.site.com/catalog/get_phone.ua?ref=page_2&phone_id=1

www.site.com/catalog/get_phone.ua?ref=page_3&phone_id=1

In this case, the robots.txt file will look like this:

Clean-param: ref /catalog/get_phone.ua

Here ref is the parameter that shows where the link comes from, so it is written first, and only then the rest of the address is indicated.
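What the robot does with such a rule can be imitated in a few lines of Python. This is only a model of the idea, not Yandex's implementation, and strip_params is a hypothetical helper name: the ref parameter is thrown away, after which the three addresses turn into one.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def strip_params(url, ignored):
    """Return the URL without the query parameters named in `ignored`."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in ignored
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))


urls = [
    "www.site.com/catalog/get_phone.ua?ref=page_1&phone_id=1",
    "www.site.com/catalog/get_phone.ua?ref=page_2&phone_id=1",
    "www.site.com/catalog/get_phone.ua?ref=page_3&phone_id=1",
]

# A set keeps only distinct addresses.
unique = {strip_params(url, {"ref"}) for url in urls}
print(unique)
```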

But before moving on to the reference file, you still need to learn about some signs that are used when writing a robots.txt file.

Symbols in robots.txt

The main characters of the file are “/, *, $, #”.

The slash "/" shows what we want to hide from robots. For example, a single slash in the Disallow rule prohibits scanning the entire site. With two slashes you can prohibit scanning of a specific directory, for example: /catalog/.

This entry says that we prohibit scanning the entire contents of the catalog folder, but if we write /catalog, we will prohibit all links on the site that begin with /catalog.

The asterisk "*" means any sequence of characters in the path. It is implied at the end of each rule.

User-agent: *

Disallow: /catalog/*.gif$

This entry says that no robot should index files with a .gif extension in the /catalog/ folder.

The dollar sign "$" limits the effect of the asterisk. If you want to block the entire contents of the catalog folder, but you cannot block URLs that contain /catalog, the entry in the index file will be like this:

The hash "#" is used for comments that a webmaster leaves for himself or other webmasters. The robot will not take them into account when scanning the site.

For example:

What an ideal robots.txt looks like

The file opens the contents of the site for indexing, the host is registered and a site map is indicated, which will allow search engines to always see the addresses that should be indexed. The rules for Yandex are specified separately, since not all robots understand the Host instructions.

But do not rush to copy the contents of the file to your own site: each site must have its own rules, which depend on the type of site and the CMS. Therefore, it is worth remembering all the rules when filling out the robots.txt file.

How to check your robots.txt file

If you want to know whether the robots.txt file was filled out correctly, check it in the Google and Yandex webmaster tools. Simply paste the contents of the robots.txt file into the form and specify the site to be checked.

How not to fill out the robots.txt file

Often, when filling out an index file, annoying mistakes are made, usually through ordinary inattention or haste. Below is a list of errors that I have encountered in practice.

2. Writing several folders/directories in one Disallow statement:

Such an entry can confuse search robots; they may not understand what exactly they should not index: either the first folder or the last one, so you need to write each rule separately.

3. The file itself must be called only robots.txt, and not Robots.txt, ROBOTS.TXT or anything else.

4. You cannot leave the User-agent rule empty - you need to say which robot should take into account the rules written in the file.

5. Extra characters in the file (slashes, asterisks).

6. Adding pages to the file that should not be in the index.
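Some of these mistakes can be caught automatically. Below is a rough sketch of such a check in Python; the function lint_robots, its messages and the sample paths are all made up for illustration, and it covers only the file name, an empty User-agent, and several paths in one rule.

```python
def lint_robots(text, filename="robots.txt"):
    """Return (line_number, message) pairs for a few common mistakes."""
    problems = []
    if filename != "robots.txt":
        problems.append((0, "file must be named robots.txt, in lowercase"))
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent" and not value:
            problems.append((number, "empty User-agent"))
        elif field in ("disallow", "allow") and len(value.split()) > 1:
            problems.append((number, "several paths in one rule"))
    return problems


sample = "User-agent:\nDisallow: /css/ /cgi-bin/ /images/\n"
for problem in lint_robots(sample, filename="Robots.txt"):
    print(problem)
```

A check like this does not replace the webmaster tools of Google and Yandex, but it catches typos before the file is uploaded.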

Non-standard use of robots.txt

In addition to direct functions, the index file can become a platform for creativity and a way to find new employees.

Here's a site where robots.txt is itself a small site with work elements and even an ad unit.

The file is mainly used by SEO agencies as a platform for searching for specialists. Who else might know about its existence? :)

And Google has a special humans.txt file, so that you don't start thinking that specialists made of flesh and blood are being discriminated against.

Conclusions

With the help of robots.txt you can give instructions to search robots, advertise yourself, your brand, and look for specialists. This is a great field for experimentation. The main thing is to remember about filling out the file correctly and typical mistakes.

Rules, also known as directives, also known as instructions in the robots.txt file:

- User-agent - a rule about which robots need to view the instructions described in robots.txt.

- Disallow gives recommendations on what information should not be scanned.

- Sitemap tells robots that all site URLs required for indexing are located at http://site.ua/sitemap.xml.

- The Host tells the Yandex robot which of the site mirrors should be considered for indexing.

- Allow permits scanning of any file, directory, or page.

Signs when compiling robots.txt:

- The dollar sign "$" limits the actions of the asterisk sign.

- The slash “/” shows what we want to hide from robots.

- The asterisk "*" means any sequence of characters in the path. It is implied at the end of each rule.

- The hash "#" is used to indicate comments that a webmaster writes for himself or other webmasters.

Use the index file wisely - and the site will always be in the search results.

The robots.txt file is required for most websites.

Every SEO specialist must understand the meaning of this file and be able to write its most common directives.

A properly composed robots.txt improves the site’s position in search results and, among other promotion methods, is an effective SEO tool.

To understand what robots.txt is and how it works, let’s remember how search engines work.

To check for it, enter your root domain in the address bar, then add /robots.txt to the end of the URL.

For example, Moz's robots.txt file is located at: moz.com/robots.txt.
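The same address can be built in a script from any page of the site. A small Python sketch using the standard urllib.parse module; robots_url is a hypothetical helper name and the page address is invented for the example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url):
    """Return the robots.txt address for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace everything else with /robots.txt.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://moz.com/some/page?x=1"))
```

For the address above this prints https://moz.com/robots.txt.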

Instructions for the "robot"

How to create a robots.txt file?

3 types of instructions for robots.txt.

If you find that your robots.txt file is missing, it’s easy to create one.

As already mentioned at the beginning of the article, this is a regular text file in the root directory of the site.

It can be done through the admin panel or file manager, with which the programmer works with files on the site.

We will figure out how and what to write there as the article progresses.

Search engines receive three types of instructions from this file:

- scan everything, that is, full access (Allow);

- you can’t scan anything - a complete ban (Disallow);

- You cannot scan individual elements (which ones are indicated) - partial access.

In practice it looks like this:

Please note that the page may still appear in the search results if it is linked to on or off this site.

To understand this better, let's study the syntax of this file.

Robots.txt syntax

Robots.txt: what does it look like?

Important points: what you should always remember about robots.

Seven common terms that are often found on websites.

In its simplest form, the robots.txt file looks like this:

User-agent: [name of the system for which we are writing directives]

Disallow:

Sitemap: [indicate where we have the site map]

# Rule 1

User-agent: Googlebot

Disallow: /prim1/

Sitemap: http://www.nashsite.com/sitemap.xml

Together, these three lines are considered the simplest robots.txt.

Here we prevented the bot from indexing the URL: http://www.nashsite.com/prim1/ and indicated where the site map is located.

Please note that in the robots file, the set of directives for one user agent (the search engine) is separated from the set of directives for another by a line break.

In a file with multiple search engine directives, each ban or allow applies only to the search engine specified in that specific block of lines.

This is an important point and should not be forgotten.

If a file contains rules that apply to multiple user agents, the system will give priority to directives that are specific to the specified search engine.

Here's an example:

In the illustration above, MSNbot, discobot and Slurp have individual rules that will work only for these search engines.

All other user agents follow the general directives in the user-agent group: *.

The syntax of robots.txt is absolutely not complicated.

There are seven common terms that are often found on websites.

- User-agent: a specific web search engine (search engine bot) that you give crawling instructions to. A list of most user agents can be found here. In total, it has 302 systems, of which the two most relevant are Google and Yandex.

- Disallow: A disallow command that tells the agent not to visit the URL. Only one "disallow" line is allowed per URL.

- Allow (supported by Googlebot and other major robots, including Yandex): The command tells the bot that it can access a page or subfolder even if its parent page or subfolder has been closed.

- Crawl-delay: How many seconds the search engine should wait before loading and crawling the page content.

Please note - Googlebot does not support this command, but crawl speed can be manually set in Google Search Console.

- Sitemap: Used to point to the location of any XML sitemaps associated with this URL. This command is supported by Google, Ask, Bing, Yahoo and Yandex.

- Host: this directive indicates the main mirror of the site, which should be taken into account when indexing. It can only be registered once.

- Clean-param: This command is used to combat duplicate content during dynamic addressing.

Regular Expressions

Regular expressions: what they look like and what they mean.

How to allow and deny crawling in robots.txt.

In practice, robots.txt files can grow and become quite complex and unwieldy.

The format supports two pattern-matching characters (often loosely called regular expressions) and a comment marker, which make it possible to work flexibly with pages and subfolders.

- * is a wildcard: in a path it stands for any sequence of characters, and in User-agent it means that the directives apply to all search bots;

- $ matches the end of a URL or string;

- # used for developer and optimizer comments.

Here are some examples of robots.txt for http://www.nashsite.com

Robots.txt file URL: www.nashsite.com/robots.txt

User-agent: * (that is, for all search engines)

Disallow: / (slash indicates the root directory of the site)

We just stopped all search engines from crawling and indexing the entire site.

How often is this action required?

Not often, but there are cases when it is necessary for a resource not to participate in search results, and visits to be made through special links or through corporate authorization.

This is how the internal websites of some companies work.

In addition, such a directive is prescribed if the site is at the stage of development or modernization.

If you need to allow the search engine to crawl everything that is on the site, then you need to write the following commands in robots.txt:

User-agent: *

Disallow:

There is nothing in the prohibition (disallow), which means everything is possible.

Using this syntax in a robots.txt file allows crawlers to crawl all pages on http://www.nashsite.com, including the home page, admin page, and contact page.

Blocking specific search bots and specific folders

Syntax for the Google search engine (Googlebot).

Syntax for other search agents.

User-agent: Googlebot

Disallow: /example-subfolder/

This syntax only tells the Google search engine (Googlebot) not to crawl the address: www.nashsite.com/example-subfolder/.

Blocking individual pages for specified bots:

User-agent: Bingbot

Disallow: /example-subfolder/blocked-page.html

This syntax tells only Bingbot (the name of the Bing search agent) not to visit the page at: www.nashsite.com/example-subfolder/blocked-page.html.

That's basically it.

If you master these seven commands and three symbols and understand the logic of application, you will be able to write the correct robots.txt.

Why it doesn't work and what to do

Algorithm of the main action.

Other methods.

Incorrect robots.txt is a problem.

After all, identifying an error and then understanding it will take time.

Re-read the file, make sure you haven't blocked anything unnecessary.

If after a while it turns out that the page still appears in the search results, check in Google Webmaster whether the search engine has re-indexed the site, and check whether there are any external links to the closed page.

Because if they exist, then it will be more difficult to hide it from the search results; other methods will be required.

Well, before using, check this file with a free tester from Google.

Timely analysis helps to avoid troubles and saves time.

This is a text file (document in .txt format) containing clear instructions for indexing a specific site. The file indicates to search engines which pages of a web resource need to be indexed and which should be prohibited from indexing.

It would seem, why prohibit the indexing of some site content? Let the search robot index everything indiscriminately, guided by the principle: the more pages, the better! But that's not true.

Not all the content that makes up a website is needed by search robots. There are system files, there are duplicate pages, there are keyword categories and much more that do not necessarily need to be indexed. Otherwise, the following situation cannot be ruled out.

When a search robot comes to your site, the first thing it does is try to find the notorious robots.txt. If this file is not detected by it or is detected, but it is compiled incorrectly (without the necessary prohibitions), the search engine “messenger” begins to study the site at its own discretion.

While exploring the site this way, the robot indexes everything, and there is no guarantee that it starts with the pages that need to get into the search first (new articles, reviews, photo reports, etc.). Naturally, in this case, the indexing of a new site may take some time.

In order to avoid such an unenviable fate, the webmaster needs to take care in time to create the correct robots.txt file.

“User-agent:” is the main directive of robots.txt

In practice, directives (commands) are written in robots.txt using special terms, the main one of which is the “User-agent:” directive. It names the search robot to which the instructions that follow are addressed. For example:

- User-agent: Googlebot – all commands that follow this basic directive will relate exclusively to the Google search engine (its indexing robot);

- User-agent: Yandex – the addressee in this case is the domestic search engine Yandex.

The robots.txt file can also address all other search engines at once. The command in this case looks like this: User-agent: *. The special symbol “*” usually means “any text”. Here it means any search robot that has no group of its own in the file. Google, by the way, also follows these rules unless you address it by name.
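How a robot chooses between a group addressed to it by name and the “*” group can be tried out with Python's standard urllib.robotparser module. This is only a sketch: the paths /private/ and /tmp/ and the helper can_index are made up for illustration, and real search engines may differ in details from Python's parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: one group for Yandex, one for everybody else.
ROBOTS_TXT = """\
User-agent: Yandex
Disallow: /private/

User-agent: *
Disallow: /tmp/
"""


def can_index(agent, path):
    """Return True if the robot named `agent` may fetch `path`."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(agent, path)


# Yandex has its own group, so only that group applies to it.
print(can_index("Yandex", "/private/page.html"))
print(can_index("Yandex", "/tmp/file.html"))
# Googlebot is not named, so it falls back to the "*" group.
print(can_index("Googlebot", "/tmp/file.html"))
```

Note that a robot with its own group does not inherit the rules from the “*” group, so anything that should be closed for everyone has to be repeated in each named group.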

“Disallow:” command – prohibiting indexing in robots.txt

The main “User-agent:” directive addressed to search engines can be followed by specific commands. The most common of them is the “Disallow:” directive. Using this command, you can prevent the search robot from indexing the entire web resource or some part of it. It all depends on the path written after the directive. Let's look at examples:

User-agent: Yandex
Disallow: /

This kind of entry in the robots.txt file means that the Yandex search robot is not allowed to index this site at all, since the prohibitory sign “/” stands alone and is not accompanied by any clarifications.

User-agent: Yandex
Disallow: /wp-admin

As you can see, this time there is a clarification, and it concerns the wp-admin system folder. That is, the indexing robot, following the path specified in this command, will refuse to index this entire folder.

User-agent: Yandex
Disallow: /wp-content/themes

This instruction lets the Yandex robot into the large “wp-content” folder, where it may index everything except the contents of “themes”.

Let’s explore the “forbidden” capabilities of the robots.txt text document further:

User-agent: Yandex
Disallow: /index$

In this command, as the example shows, another special sign is used: “$”. It marks the end of the address, so the robot is forbidden to index only the page whose path is exactly /index. Addresses that merely begin with the same letters, such as the separate site file “index.php”, are not prohibited. Thus, the “$” symbol is used when a selective approach to prohibiting indexing is necessary.

Also, in the robots.txt file, you can prohibit indexing of individual resource pages that contain certain characters. It might look like this:

User-agent: Yandex
Disallow: *&*

This command tells the Yandex search robot not to index any page on the website whose URL contains the “&” character. The “*” on each side stands for any sequence of other characters, including an empty one. However, there may be another situation:

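User-agent: Yandex
Disallow: *&$

Here the indexing ban applies only to those pages whose links end in “&”. The “$” at the end matters: without it, the robot treats the rule as if it ended in “*”, and the rule would match the same URLs as the previous example.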

The ban on indexing a site's system files should raise no questions, but the ban on indexing individual pages of the resource may. Why is this necessary at all? An experienced webmaster may have many reasons, but the main one is the need to get rid of duplicate pages in the search. Using the “Disallow:” command and the group of special characters discussed above, you can deal with “unwanted” pages quite simply.
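To get a feel for how the special characters work, here is a small sketch of the matching as Google and Yandex describe it: “*” stands for any sequence of characters and “$” ties the rule to the end of the address. The function rule_matches is my own illustration, not code from any search engine, and it ignores details such as percent-encoding.

```python
import re


def rule_matches(pattern, path):
    """Check whether a Disallow/Allow pattern covers the given URL path.

    Assumed semantics: the pattern is matched from the start of the path,
    "*" matches any run of characters (including none), and a trailing
    "$" means the path must end where the pattern ends.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape every literal piece and join the pieces with ".*".
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    if anchored:
        regex += "$"
    return re.match(regex, path) is not None


print(rule_matches("/wp-admin", "/wp-admin/post.php"))  # plain prefix rule
print(rule_matches("/index$", "/index"))                # exact address
print(rule_matches("/index$", "/index.php"))            # longer address
print(rule_matches("*&*", "/page?a=1&b=2"))             # "&" anywhere
```

Without “$”, a rule behaves as a prefix, which is why /wp-admin also covers everything inside that folder.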

“Allow:” command – allowing indexing in robots.txt

The antipode of the previous directive is the “Allow:” command. Using the same clarifying elements, but with this command in the robots.txt file, you can allow the indexing robot to enter the site elements you need into the search database. To confirm this, here is another example:

User-agent: Yandex
Allow: /wp-admin

For some reason, the webmaster changed his mind and made the appropriate adjustments to robots.txt. As a consequence, from now on the contents of the wp-admin folder are officially approved for indexing by Yandex.

Even though the “Allow:” command exists, it is not used very often in practice. By and large, there is no need for it, since permission is the default. The site owner just needs to use the “Disallow:” directive, prohibiting this or that content from being indexed. After this, all other content of the resource that is not prohibited in the robots.txt file is perceived by the search robot as something that can and should be indexed. Everything is like in jurisprudence: “Everything that is not prohibited by law is permitted.”
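One case where “Allow:” does earn its place is opening a single file inside a closed folder. Below is a minimal sketch with Python's standard urllib.robotparser; the file name admin-ajax.php and the robot name AnyBot are just assumptions for the example. Python's parser applies the first matching rule in file order, so the “Allow:” line is placed before the “Disallow:” line; search engines may resolve such conflicts by their own rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: close the folder, but open one file inside it.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ("/wp-admin/admin-ajax.php", "/wp-admin/options.php", "/blog/post"):
    print(path, parser.can_fetch("AnyBot", path))
```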

"Host:" and "Sitemap:" directives

The overview of important directives in robots.txt is completed by the commands “Host:” and “Sitemap:”. The first is intended exclusively for Yandex and tells it which site mirror (with or without www) is considered the main one. For example, the entry might look like this:

User-agent: Yandex
Host: site

or, if the version with www is the main mirror:

User-agent: Yandex
Host: www.site

Using this command also avoids unnecessary duplication of site content.

In turn, the “Sitemap:” directive shows the indexing robot the correct path to the so-called Site Map: the files sitemap.xml and sitemap.xml.gz (in the case of CMS WordPress). A hypothetical example might be:

User-agent: *
Sitemap: http://site/sitemap.xml
Sitemap: http://site/sitemap.xml.gz

Writing this command in the robots.txt file will help the search robot find the Site Map faster. This, in turn, will also speed up the process of getting web resource pages into search results.
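If you want to see which sitemap addresses a robot would pick up from the file, Python's standard urllib.robotparser can list them. This is a sketch that assumes Python 3.8 or newer (the site_maps() method appeared in that version) and reuses the placeholder domain “site” from the example above.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: http://site/sitemap.xml
Sitemap: http://site/sitemap.xml.gz
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the Sitemap values in file order,
# or None when the file has no Sitemap lines.
sitemaps = parser.site_maps()
print(sitemaps)
```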

The robots.txt file is ready - what next?

Let's assume that you, as a novice webmaster, have mastered the entire array of information that we have given above. What to do after? Create a robots.txt text document taking into account the features of your site. To do this you need:

- use a text editor (for example, Notepad) to compose the robots.txt you need;

- check the correctness of the created document, for example, using the robots.txt checking service from Yandex;

- using an FTP client, upload the finished file to the root folder of your site (in the case of WordPress, this is usually the public_html folder).

Yes, we almost forgot. A novice webmaster, without a doubt, before experimenting himself, will first want to look at ready-made examples of this file made by others. Nothing could be simpler. Just enter site.ru/robots.txt in the address bar of your browser, replacing “site.ru” with the name of the resource you are interested in. That's all.

Happy experimenting and thanks for reading!

The purpose of this guide is to help webmasters and administrators use robots.txt.

Introduction

The robots exclusion standard is very simple at its core. In short, it works like this:

When a robot that follows the standard visits a site, it first requests a file called “/robots.txt”. If such a file is found, the robot searches it for instructions prohibiting the indexing of certain parts of the site.

Where to place the robots.txt file

The robot simply requests the URL “/robots.txt” on your site; the site in this case is a specific host on a specific port.

| Site URL | Robots.txt file URL |
| --- | --- |
| http://www.w3.org/ | http://www.w3.org/robots.txt |
| http://www.w3.org:80/ | http://www.w3.org:80/robots.txt |
| http://www.w3.org:1234/ | http://www.w3.org:1234/robots.txt |
| http://w3.org/ | http://w3.org/robots.txt |
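The rule behind the table (same scheme, same host, same port, path replaced by /robots.txt) can be sketched in a few lines of Python. The function name robots_url is my own; only the standard urllib.parse module is used.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url):
    """Build the robots.txt address for the site that serves `page_url`."""
    parts = urlsplit(page_url)
    # Keep scheme and host:port, drop the path, query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("http://www.w3.org/"))
print(robots_url("http://www.w3.org:1234/some/page.html?x=1"))
```

Since the port is part of the site, http://www.w3.org:1234/ gets its own robots.txt, separate from the one on the default port.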

There can only be one file “/robots.txt” on the site. For example, you should not place the robots.txt file in user subdirectories - robots will not look for them there anyway. If you want to be able to create robots.txt files in subdirectories, then you need a way to programmatically collect them into a single robots.txt file located at the root of the site. You can use .

Remember that URLs are case sensitive and the file name “/robots.txt” must be written entirely in lowercase.

| Wrong location of robots.txt | Reason |
| --- | --- |
| http://www.w3.org/admin/robots.txt | The file is not located at the root of the site |
| http://www.w3.org/~timbl/robots.txt | The file is not located at the root of the site |
| ftp://ftp.w3.com/robots.txt | Robots do not index ftp |
| http://www.w3.org/Robots.txt | The file name is not in lowercase |

As you can see, the robots.txt file should be placed exclusively at the root of the site.

What to write in the robots.txt file

The robots.txt file usually contains something like:

User-agent: *
Disallow: /cgi-bin/
Disallow: /tmp/
Disallow: /~joe/

In this example, indexing of three directories is prohibited.

Note that each directory is listed on a separate line: you cannot write "Disallow: /cgi-bin/ /tmp/". You also cannot split one Disallow or User-agent statement into several lines, because line breaks are used to separate instructions from each other.
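Mistakes of this kind are easy to catch before uploading the file. Here is a rough sketch of such a check in Python; the function lint_robots and its messages are invented for this example, and it looks only for the problems just described, not for every possible error.

```python
def lint_robots(text):
    """Return a list of (line_number, message) for suspicious lines."""
    problems = []
    in_group = False  # becomes True after the first User-agent line
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and spaces
        if not line:
            continue
        name, sep, value = line.partition(":")
        key = name.strip().lower()
        value = value.strip()
        if not sep:
            # Typical sign of a statement broken across two lines.
            problems.append((number, "no 'Name: value' pair on this line"))
        elif key == "user-agent":
            in_group = True
        elif key in ("disallow", "allow"):
            if not in_group:
                problems.append((number, "rule appears before any User-agent line"))
            if len(value.split()) > 1:
                problems.append((number, "more than one path on a single line"))
    return problems


print(lint_robots("User-agent: *\nDisallow: /cgi-bin/ /tmp/\n"))
```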

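Under the original standard, regular expressions and wildcards cannot be used either. The “asterisk” (*) in the User-agent instruction means “any robot”. Instructions like “Disallow: *.gif” or “User-agent: Ya*” are not part of the standard. Some search engines, including Google and Yandex, accept “*” and “$” in paths as their own extension, but other robots may not understand such lines.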

The specific instructions in robots.txt depend on your site and what you want to prevent from being indexed. Here are some examples:

Block the entire site from being indexed by all robots

User-agent: *
Disallow: /

Allow all robots to index the entire site

User-agent: *
Disallow:

Or you can simply create an empty file “/robots.txt”.

Block only a few directories from indexing

User-agent: *
Disallow: /cgi-bin/
Disallow: /tmp/
Disallow: /private/

Prevent site indexing for only one robot

User-agent: BadBot
Disallow: /

Allow one robot to index the site and deny all others

User-agent: Yandex
Disallow:

User-agent: *
Disallow: /

Deny all files except one from indexing

This is quite difficult, because the original standard has no “Allow” statement. Instead, you can move all files except the one you want to allow for indexing into a subdirectory and prevent it from being indexed:

User-agent: *
Disallow: /docs/

Or you can list every file that should not be indexed:

User-agent: *
Disallow: /private.html
Disallow: /foo.html
Disallow: /bar.html
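When the list of closed files is long, it may be easier to generate the group than to type it by hand. A minimal sketch in Python follows; the function build_robots is hypothetical and simply writes one Disallow line per path, as the standard requires.

```python
def build_robots(disallowed_paths, agent="*"):
    """Return the text of one robots.txt group for the given robot."""
    lines = [f"User-agent: {agent}"]
    if disallowed_paths:
        # One path per line: several paths on one line are not allowed.
        lines.extend(f"Disallow: {path}" for path in disallowed_paths)
    else:
        # An empty Disallow value means nothing is blocked.
        lines.append("Disallow:")
    return "\n".join(lines) + "\n"


print(build_robots(["/private.html", "/foo.html", "/bar.html"]))
```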